How Does a Live Streaming Platform Work?

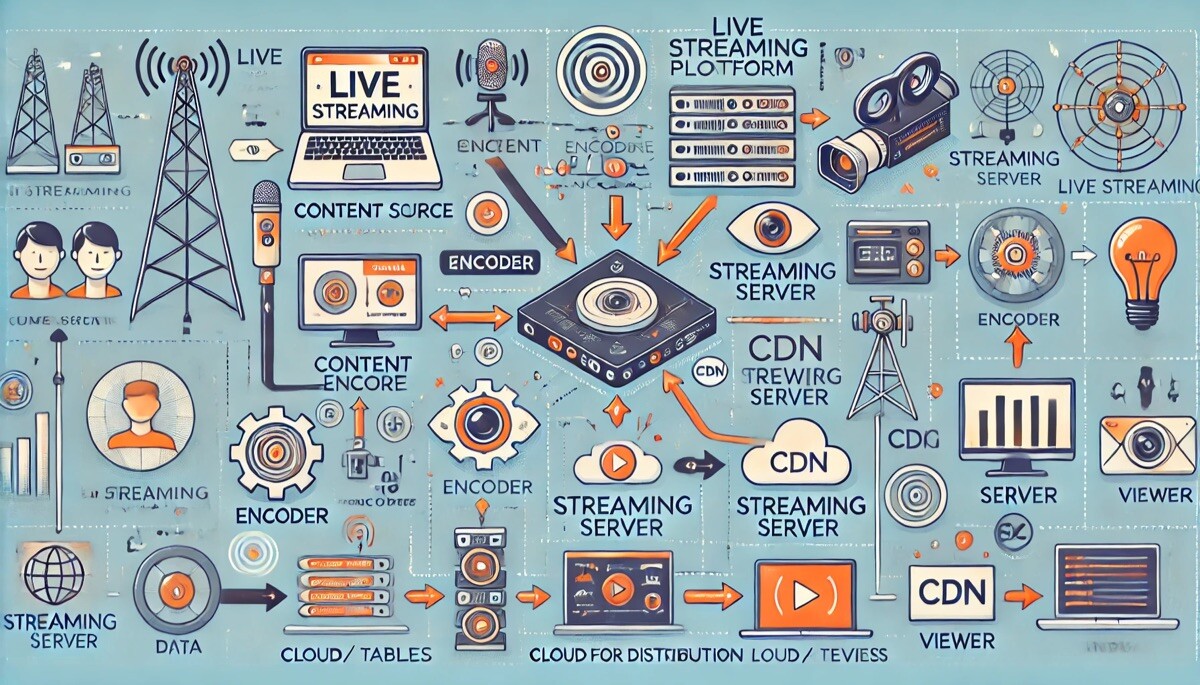

Live streaming has become an integral part of modern content sharing, particularly on platforms like YouTube and Twitch. The process of live streaming is complex and involves several intricate steps that span from the streamer’s device to the viewer’s screen. This journey includes encoding video content, transmitting it over the internet, and finally delivering it to viewers with minimal delay.

Streamers use encoders to send video data in a format that platforms like YouTube can process. Transport protocols play a crucial role here, with RTMP being the most widely used, while newer options like SRT are emerging and promise lower latency. Once the video reaches the platform, it is transcoded into multiple quality levels so that adaptive bitrate streaming can match each viewer’s connection. The processed video is then packaged in formats such as HLS and DASH and delivered to the audience.

Key Takeaways

- Live streaming involves complex video encoding and transmission steps.

- Platforms use adaptive bitrate streaming to fit various viewer needs.

- Newer protocols like SRT aim to reduce streaming latency.

Live Streaming Challenges

Live streaming means sending video over the internet in near real time. That is hard for two reasons: video must be compressed and processed quickly, which takes considerable computing power, and even compressed video is a large amount of data, so moving it across the network takes time.

To start a live stream, the streamer uses video and audio devices connected to an encoder. The encoder prepares the stream to be sent using a transport protocol, most often RTMP (Real-Time Messaging Protocol), which has been around for a long time and was originally used with Adobe Flash. Some streamers use RTMPS, a secure version of RTMP. A newer protocol, SRT (Secure Reliable Transport), is based on UDP, aims for lower delay, and performs better in poor network conditions, but many platforms have not yet adopted it.

Point-of-presence servers help improve the streamer’s upload conditions. These servers are available globally, and the streamer connects to the nearest one, often chosen automatically. After reaching the server, the stream travels on a quick and reliable network for processing. The processing is mostly about offering the stream in different qualities. Video players then select the best quality based on the internet connection and adjust if needed. This operates using adaptive bitrate streaming.

Incoming video streams are transcoded into various qualities, a task that requires considerable computing power. Each stream is then divided into small segments, a step called segmentation, and the segments are packaged into formats that video players understand. HLS (HTTP Live Streaming) is the most popular format and consists of a manifest file and video chunks. The manifest acts as a directory that tells players which segments to load. The manifest and segments are cached by a CDN to reduce the delay between the platform and the viewer. DASH (Dynamic Adaptive Streaming over HTTP) is another format in use, though Apple devices do not support it natively.

Streamers and platforms can adjust settings that raise or lower stream delay. Some platforms let streamers choose how interactive they want the stream to be, and then adjust the video quality to match that choice. To minimize latency, streamers should also optimize their own equipment setup.

From Streamer to Viewer

Starting the Live Broadcast

The live-streaming process begins when the streamer activates the broadcast. This can involve using various audio and video sources connected to an encoder, such as the commonly used open-source OBS software. Platforms like YouTube even offer simple ways to stream directly from a web browser with a webcam or from a smartphone camera. To ensure seamless video delivery across different devices, platforms often utilize specialized multi-platform OTT applications that support adaptive bitrate streaming and broad compatibility.

Encoding and Transmitting Protocols

The encoder’s role is to package the video stream and send it using a transport protocol that the streaming platform can process. The most widely used protocol is RTMP (Real-Time Messaging Protocol), a TCP-based protocol originally developed for Adobe Flash. Encoders also support RTMPS, a secure version of RTMP. There is a newer protocol called SRT (Secure Reliable Transport) which operates over UDP, offering lower latency and better performance in less favorable network conditions, though it is not widely supported yet.
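As a rough illustration of what an encoder does at this step, the sketch below builds an `ffmpeg` command line that compresses a source to H.264/AAC and pushes it to an RTMP ingest. The function name, the ingest URL, the stream key, and the default bitrates are assumptions made for the example, not values taken from any particular platform.

```python
from typing import List


def build_rtmp_push_command(
    input_path: str,
    ingest_url: str,
    stream_key: str,
    video_kbps: int = 4500,   # assumed default, not a platform requirement
    audio_kbps: int = 160,    # assumed default
) -> List[str]:
    """Return an ffmpeg argument list that pushes a stream to an RTMP(S) ingest."""
    return [
        "ffmpeg",
        "-re",                     # read the input at its native frame rate
        "-i", input_path,
        "-c:v", "libx264",         # encode video as H.264
        "-preset", "veryfast",     # favor encoding speed over compression
        "-b:v", f"{video_kbps}k",
        "-g", "60",                # one keyframe every 60 frames
        "-c:a", "aac",             # encode audio as AAC
        "-b:a", f"{audio_kbps}k",
        "-f", "flv",               # RTMP carries FLV-muxed data
        f"{ingest_url.rstrip('/')}/{stream_key}",
    ]


if __name__ == "__main__":
    # Hypothetical ingest endpoint and key, for illustration only.
    cmd = build_rtmp_push_command("input.mp4", "rtmp://ingest.example.com/live", "abc123")
    print(" ".join(cmd))
```

The command is only constructed here, not executed. Switching the URL scheme to `rtmps://` would target a secure ingest on platforms that offer one.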

Linking to Nearby Servers

To optimize upload conditions for the streamer, live streaming platforms provide point-of-presence servers around the globe. Streamers typically connect to the server that is nearest to them, which usually occurs automatically using DNS latency-based routing or an anycast network.
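Real platforms make this choice inside DNS or the network itself, but the underlying idea of latency-based selection can be sketched in a few lines. In the example below, the point-of-presence names and round-trip times are invented, and taking the median of several samples is an assumption chosen to keep one slow probe from skewing the result.

```python
from statistics import median
from typing import Dict, List


def pick_nearest_pop(rtt_samples_ms: Dict[str, List[float]]) -> str:
    """Return the point-of-presence with the lowest median round-trip time."""
    # Ignore locations for which no measurement succeeded.
    candidates = {pop: median(samples) for pop, samples in rtt_samples_ms.items() if samples}
    if not candidates:
        raise ValueError("no latency samples available")
    return min(candidates, key=candidates.get)


if __name__ == "__main__":
    # Hypothetical measurements from a streamer located in Europe.
    samples = {
        "fra": [18.0, 22.0, 20.0],
        "iad": [95.0, 90.0, 99.0],
        "sin": [180.0, 175.0, 400.0],
    }
    print(pick_nearest_pop(samples))
```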

Backbone Network Delivery

After the stream reaches the point-of-presence server, it is swiftly transmitted across a reliable backbone network to the platform for additional processing. The objective here is to deliver the video stream in various qualities and bitrates so that modern video players can automatically adjust based on the viewer’s internet connection quality. This dynamic adjustment is known as adaptive bitrate streaming.

Processing Steps on Streaming Platforms

Dynamic Quality Video Transmission

Streaming platforms use a technology called adaptive bitrate streaming. It lets video players adjust quality automatically. This means that the video resolution and bitrate change based on the viewer’s internet speed. If the network slows down, the player can switch to a lower quality quickly.
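The player-side decision can be illustrated with a deliberately simple rule: pick the highest-bitrate rendition that fits within a fraction of the measured throughput. The rendition names, the bitrates, and the 0.8 safety factor are assumptions for this sketch; production players use more elaborate heuristics that also consider buffer level.

```python
from typing import List, NamedTuple


class Rendition(NamedTuple):
    name: str
    bitrate_kbps: int


def choose_rendition(
    renditions: List[Rendition],
    throughput_kbps: float,
    safety_factor: float = 0.8,  # assumed headroom for throughput fluctuations
) -> Rendition:
    """Pick the best rendition whose bitrate fits the throughput budget."""
    if not renditions:
        raise ValueError("at least one rendition is required")
    budget = throughput_kbps * safety_factor
    ordered = sorted(renditions, key=lambda r: r.bitrate_kbps)
    best = ordered[0]  # fall back to the lowest quality if nothing fits
    for rendition in ordered:
        if rendition.bitrate_kbps <= budget:
            best = rendition
    return best


if __name__ == "__main__":
    # Hypothetical quality levels offered by the platform.
    ladder = [
        Rendition("1080p", 6000),
        Rendition("720p", 3000),
        Rendition("480p", 1500),
        Rendition("360p", 800),
    ]
    for measured in (10000, 5000, 500):
        print(measured, "->", choose_rendition(ladder, measured).name)
```

The player would rerun this choice as new throughput measurements arrive, which is how the quality drops when the network slows down.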

Converting Videos for Streaming

Transcoding is a process where the platform changes the video stream into different quality levels. It converts incoming streams into various resolutions and bitrates. This requires a lot of computing power because multiple formats are often prepared at the same time.
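Before any transcoding starts, a platform has to decide which outputs to produce. The sketch below assumes a fixed list of quality levels, often called a ladder, and keeps only the rungs that do not exceed the source resolution. The ladder values and the function name are hypothetical.

```python
from typing import List, Tuple

# Hypothetical ladder: (output height in pixels, video bitrate in kbps).
DEFAULT_LADDER: List[Tuple[int, int]] = [
    (1080, 6000),
    (720, 3000),
    (480, 1500),
    (360, 800),
]


def plan_transcodes(
    source_height: int,
    ladder: List[Tuple[int, int]] = DEFAULT_LADDER,
) -> List[Tuple[int, int]]:
    """Return the rungs to produce, skipping any taller than the source."""
    plan = [(height, kbps) for height, kbps in ladder if height <= source_height]
    if not plan:
        # The source is smaller than every rung: fall back to the smallest one.
        plan = [min(ladder)]
    return plan


if __name__ == "__main__":
    print(plan_transcodes(720))
```

Every rung in the plan is a separate encode running at the same time, which is where the computing cost comes from.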

Breaking Videos into Smaller Pieces

Segmentation splits the video into small parts, each only a few seconds long. Short segments are easier to deliver over the internet, to cache, and to switch between when the player changes quality.
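One detail worth illustrating is that a segment normally starts on a keyframe so that a player can decode it independently. The sketch below cuts a sequence of frames into segments at the first keyframe at or after a target duration. The `Frame` type and the 2-second target are assumptions made for the example.

```python
from typing import List, NamedTuple


class Frame(NamedTuple):
    pts: float       # presentation timestamp in seconds
    keyframe: bool   # True if the frame can be decoded on its own


def segment_frames(frames: List[Frame], target_seconds: float = 2.0) -> List[List[Frame]]:
    """Split frames into segments that each begin at a keyframe."""
    segments: List[List[Frame]] = []
    current: List[Frame] = []
    for frame in frames:
        long_enough = bool(current) and frame.pts - current[0].pts >= target_seconds
        if frame.keyframe and long_enough:
            # Close the running segment and start a new one at this keyframe.
            segments.append(current)
            current = []
        current.append(frame)
    if current:
        segments.append(current)
    return segments


if __name__ == "__main__":
    # Synthetic input: one frame every 0.5 s, a keyframe every 1 s.
    frames = [Frame(pts=i * 0.5, keyframe=(i % 2 == 0)) for i in range(12)]
    for segment in segment_frames(frames):
        print(segment[0].pts, "to", segment[-1].pts)
```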

Wrapping Segments for Compatibility

After segmentation, the video parts are packaged into formats that video players can read. A typical format is HLS (HTTP Live Streaming). It includes a manifest file that tells players where to find video chunks. Another format used is DASH (Dynamic Adaptive Streaming over HTTP), though it isn’t supported by all devices. This step helps ensure that the viewer gets a steady stream on their device.
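To make the manifest idea concrete, the sketch below writes a minimal HLS media playlist for the segments currently in the live window. The tags are standard HLS playlist tags, while the segment file names, the durations, and the function name are assumptions for illustration.

```python
import math
from typing import List, Tuple


def build_media_playlist(segments: List[Tuple[str, float]], media_sequence: int = 0) -> str:
    """Build a live HLS media playlist from (uri, duration_seconds) pairs."""
    if not segments:
        raise ValueError("a playlist needs at least one segment")
    # The target duration must not be shorter than any listed segment.
    target = max(1, math.ceil(max(duration for _, duration in segments)))
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{target}",
        f"#EXT-X-MEDIA-SEQUENCE:{media_sequence}",  # sequence number of the first listed segment
    ]
    for uri, duration in segments:
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(uri)
    # No #EXT-X-ENDLIST tag: its absence tells the player the stream is still live.
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical segment names for a stream that has been running for a while.
    window = [("seg100.ts", 2.0), ("seg101.ts", 2.0), ("seg102.ts", 2.0)]
    print(build_media_playlist(window, media_sequence=100))
```

A live player reloads this playlist periodically and fetches whichever segments it has not yet seen.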

Delivery of Content and Delays

Storing Data in Content Networks

Content Delivery Networks (CDNs) play a key role in delivering live streams to viewers efficiently. When a stream is live, the signal goes from the broadcaster to a server nearby. This helps keep the upload fast and stable. Once there, the stream moves to a central server, where various versions suitable for different internet speeds are made. Adaptive bitrate streaming allows video players to adjust the stream quality based on network conditions. By placing data in CDNs, the wait time for viewers, also known as “last-mile delay,” is minimized.
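The caching behavior can be sketched as a small edge cache that only contacts the origin when it has no fresh copy. The class name, the TTL values, and the rule of giving manifests a much shorter lifetime than segments are assumptions for this example, chosen because a live manifest changes every few seconds while a finished segment does not.

```python
import time
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Toy CDN edge node: serve from memory, fall back to the origin on a miss."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_from_origin
        self._clock = clock
        self._store: Dict[str, Tuple[float, bytes]] = {}  # path -> (expiry, body)
        self.origin_hits = 0

    @staticmethod
    def ttl_for(path: str) -> float:
        # Assumed policy: manifests go stale quickly, segments never change.
        return 1.0 if path.endswith(".m3u8") else 60.0

    def get(self, path: str) -> bytes:
        now = self._clock()
        cached = self._store.get(path)
        if cached is not None and cached[0] > now:
            return cached[1]  # cache hit: no trip to the origin
        body = self._fetch(path)
        self.origin_hits += 1
        self._store[path] = (now + self.ttl_for(path), body)
        return body


if __name__ == "__main__":
    cache = EdgeCache(lambda path: f"contents of {path}".encode())
    for _ in range(1000):  # a thousand viewers request the same segment
        cache.get("live/seg100.ts")
    print(cache.origin_hits)
```

However many viewers request the same segment from this edge, the origin serves it once per TTL window.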

End-to-End Delay

“End-to-end delay” refers to the time it takes from when a video stream is captured until it is viewed on the screen. This time is usually about 20 seconds or less. Factors that can affect this period include encoding and the protocols used, like RTMP or the newer SRT. Secure Reliable Transport (SRT) is gaining attention for its lower delay times despite most platforms still relying on the older RTMP. The ability to tweak these delays exists but often involves compromising the stream’s quality. Hence, selecting the right equipment and settings is vital for broadcasters to ensure minimal delays from capture to display.
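A back-of-the-envelope model helps show where such a delay can come from. The sketch below adds up per-stage delays under a simplified assumption: a segment is published only once it is complete, and the player buffers a few segments before starting playback. All the numbers are illustrative guesses, not measurements from any platform.

```python
def glass_to_glass_seconds(
    encode: float,
    ingest: float,
    transcode: float,
    segment_seconds: float,
    buffered_segments: int,
    cdn: float,
) -> float:
    """Estimate end-to-end delay as a simple sum of per-stage delays."""
    # One full segment must be produced before it can be published,
    # and the player then holds `buffered_segments` more before playing.
    segmenting_and_buffering = segment_seconds * (1 + buffered_segments)
    return encode + ingest + transcode + segmenting_and_buffering + cdn


if __name__ == "__main__":
    # Hypothetical stage delays in seconds.
    default = glass_to_glass_seconds(0.5, 0.5, 1.0, segment_seconds=4.0, buffered_segments=3, cdn=0.5)
    tuned = glass_to_glass_seconds(0.5, 0.5, 1.0, segment_seconds=2.0, buffered_segments=2, cdn=0.5)
    print(default, tuned)
```

In this model the segment length and the player buffer dominate the total, which is why shortening them lowers latency while leaving less room to absorb network hiccups.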

Quality and Interactivity Tuning

Live streaming platforms like YouTube and Twitch strive to balance video quality and interactivity. The core task is to achieve low “glass-to-glass” latency, which is the time it takes for video to travel from the streamer’s camera to the viewer’s screen. This latency is often around 20 seconds but can be reduced by sacrificing some video quality.

Most streamers use software encoders, like OBS, to send their broadcasts over the internet. The encoder’s job is to package the video stream into a format that platforms can process. While Real-Time Messaging Protocol (RTMP) is widely used for sending streams, a newer protocol called Secure Reliable Transport (SRT) promises lower latency, although popular platforms do not yet universally support it.

Adaptive Bitrate Streaming:

- The platform transcodes video into various resolutions and bitrates.

- Video is broken into segments lasting a few seconds.

- The video player adapts to the viewer’s internet quality, switching bitrates as needed.

Streamers connect to nearby servers for better upload conditions, helping video travel quickly. DNS latency-based routing or an anycast network directs each streamer to the nearest server, and the stream then travels over a reliable network to the main platform. There, it is transcoded and segmented to suit different devices and connection qualities.

The output formats, like HLS and DASH, are then ready for delivery. HLS involves a manifest file and video chunks, while DASH is another format option, though not supported on all devices. Throughout this process, content delivery networks (CDNs) cache data to reduce delivery time to the end viewer, ensuring they get the best experience possible.

Streamers’ Techniques for Improving Performance

Streamers use various strategies to enhance their live streaming quality and minimize latency. One key factor is their choice of encoder. Many opt for software like OBS to package their video and audio into a streamable format. This choice can impact the efficiency and quality of the broadcast.

Transport protocols play an essential role in reducing latency. While RTMP is widely used, newer protocols like SRT offer lower latency and are more resilient in poor network conditions. However, these are not yet broadly supported by popular platforms.

To ensure smooth streaming, platforms provide access points, or point-of-presence servers, around the world. Streamers connect to the nearest server, helping to reduce the time it takes for data to travel from their device to the viewer.

Adaptive bitrate streaming is an important feature. The platform offers each stream in several qualities, and the video player automatically switches between them based on the viewer’s internet speed. This ensures a seamless experience, even if the connection varies.

Streamers who focus on optimizing their local setup can achieve better results. Reducing the time it takes for video to travel from the camera to the platform is crucial for maintaining low “glass-to-glass” latency, which encompasses the entire video delivery process.

Final Thoughts

Live streaming platforms like YouTube and Twitch offer a way for streamers to share video content with viewers in real-time. The process starts when the streamer connects their audio and video sources to an encoder, which compresses the data and sends it through the internet using protocols like RTMP.

Next, the stream is sent to a nearby point-of-presence server, ensuring optimal upload conditions. From there, it’s transmitted across a fast network, reaching the platform for more processing. Here, the stream is transcoded into different resolutions and bitrates, then divided into smaller segments. Formats like HLS are used during the packaging stage that follows to ensure compatibility with video players.

The processed video content is cached by CDNs to reduce latency before reaching the viewer’s device. On average, the “glass-to-glass” delay is about 20 seconds. Platforms can tune this latency, usually at some cost to stream quality. Streamers, for their part, can mainly improve latency by optimizing their own setup at the start of the chain.